Read the full research paper in the IncRev SEO Research Community on Zenodo.org: INCREV Query Match Tool – Applying Sentence-BERT Algorithm to analyze Topical Authority for onpage content and link relevance. DOI: 10.5281/zenodo.17571849

Search engines and AI assistants don’t just count keywords anymore; they measure meaning. Our tool, Query Match, checks how closely two texts match in topic (using semantic vectorization and cosine similarity) and helps you rewrite weak paragraphs so your pages and links are clearly on-topic.

This article is a plain-English adaptation of our research paper on SBERT and Query Match.

What is Topical Authority?

Topical authority is the strength of your website (or a section of it) within a *specific subject area*. Instead of trying to be “good at everything,” you become the most complete, trustworthy, and practically useful source on a clearly defined topic (e.g., *industrial machine safety*, *EV battery recycling*, *vector embeddings for SEO*).

Why it matters

Modern search and AI systems don’t rely on keyword counts alone; they evaluate how well your content “covers a topic” and how consistently related pieces of content “support each other”. Sites that demonstrate deep, connected coverage of a niche often outrank broader, more “powerful” domains on those niche queries.

Topicality (T*) vs. Topical Authority

Topicality (often described as “T*” in industry analyses): How well a single page answers a specific query. In practice, we approximate it with a semantic similarity score between the user’s intent and your page (what Query Match measures with cosine similarity on embeddings).

Topical authority: How well your whole cluster of pages demonstrates knowledge depth across the subject—definitions, methods, standards, FAQs, comparisons, troubleshooting, benchmarks, case studies, etc.

With strong topical authority, you can outrank competitors with higher general authority (older site, more links, more reviews/E-E-A-T signals) because, in your niche, you’re clearly the specialist.

How to build topical authority (the practical recipe)

1. Define the topic scope clearly (what’s in vs. out).

2. Plan a content cluster that covers all subtopics: fundamentals, procedures, tools/standards, comparisons, pitfalls, pricing, laws, “how-to,” and FAQs.

3. Write each page for high topicality (T*): optimize paragraph-level similarity scores to the target intent using embeddings (cosine similarity).

4. Interlink semantically: connect each article to its nearest hub and sibling pages so the cluster forms a tight semantic neighborhood.

5. Iterate with data: add missing subtopics, prune or rewrite low-similarity paragraphs, and keep the cluster consistent and up to date.
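Step 4 (semantic interlinking) can be sketched in a few lines. This is a minimal sketch, assuming you already have one embedding vector per article and per hub page (from SBERT or any other model); the page slugs, the toy 3-dimensional vectors, and the helper `suggest_links` are hypothetical and are not part of Query Match.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def suggest_links(articles: dict[str, list[float]],
                  hubs: dict[str, list[float]],
                  siblings: int = 2) -> dict[str, dict]:
    """For each article, pick its nearest hub and its closest sibling articles."""
    plan = {}
    for name, vec in articles.items():
        # Nearest hub = the hub page with the highest cosine similarity.
        hub = max(hubs, key=lambda h: cosine(vec, hubs[h]))
        # Rank every other article by similarity and keep the top few.
        others = sorted(
            (other for other in articles if other != name),
            key=lambda other: cosine(vec, articles[other]),
            reverse=True,
        )
        plan[name] = {"hub": hub, "siblings": others[:siblings]}
    return plan

# Toy vectors for illustration only; real embeddings have hundreds of dimensions.
hubs = {
    "machine-safety-hub": [1.0, 0.1, 0.0],
    "battery-recycling-hub": [0.0, 0.1, 1.0],
}
articles = {
    "guard-interlocks": [0.9, 0.2, 0.1],
    "risk-assessment": [0.8, 0.3, 0.0],
    "lithium-recovery": [0.1, 0.2, 0.9],
}
print(suggest_links(articles, hubs, siblings=1))
```

Picking "nearest" by cosine similarity keeps the link plan consistent with how the pages are scored; a real cluster would also respect editorial judgment about which links make sense to readers.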

Using similarity scores to engineer authority

We quantify topical proximity with a cosine similarity score (0.0–1.0) between embeddings:

- Use it to highlight topics that are close enough to your main theme and exclude ideas that drift too far.

- Optimize each article’s paragraphs until they cross a quality threshold (e.g., >0.50) relative to the target intent.

- Roll up page-level topicality across many related articles to strengthen the cluster’s overall authority.
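A minimal sketch of the last two bullets, assuming paragraph scores against the target intent have already been computed. The text does not specify how scores are rolled up; averaging per page and then across the cluster is our assumption, and the page slugs and scores below are made up.

```python
from statistics import mean

THRESHOLD = 0.50  # quality threshold from the example above (scores must be > 0.50)

def paragraphs_to_rewrite(scores: list[float], threshold: float = THRESHOLD) -> list[int]:
    """Return the indices of paragraphs that have not yet crossed the threshold."""
    return [i for i, s in enumerate(scores) if s <= threshold]

def cluster_topicality(pages: dict[str, list[float]]) -> float:
    """Assumed roll-up: average paragraph scores per page, then average across pages."""
    return mean(mean(scores) for scores in pages.values())

# Hypothetical paragraph-level scores for three related articles.
pages = {
    "machine-safety-basics": [0.72, 0.64, 0.31],
    "safety-standards-explained": [0.58, 0.55],
    "safety-light-curtains": [0.18, 0.61, 0.66],
}
for page, scores in pages.items():
    print(page, "-> rewrite paragraphs", paragraphs_to_rewrite(scores))
print(f"cluster topicality: {cluster_topicality(pages):.2f}")
```

A plain mean lets one strong page hide a weak one; tracking the per-page list of paragraphs to rewrite alongside the cluster average keeps those gaps visible.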

Bottom line:

Topicality (T*) = page-level match to a query (semantic similarity).

Topical authority = cluster-level expertise demonstrated by many tightly related, high-similarity pages.

By measuring similarity and connecting a deep set of articles, you signal to Google and AI systems that you’re the go-to source on this subject, which often lets you outrank bigger sites on the queries that matter to your business.

Why meaning beats keyword counting

Imagine every paragraph as a dot on an invisible map. Paragraphs that mean similar things sit close together; different topics are far apart. That map is built by turning text into numbers (embeddings). We can then measure the angle between two embeddings: the cosine of that angle is the cosine similarity, a score that tells us how close in meaning two texts are. Mathematically it ranges from -1 to 1, but for text embeddings it usually falls between 0.0 and 1.0, which is the scale used throughout this article.
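For readers who want to see the calculation: cosine similarity is the dot product of two vectors divided by the product of their lengths, cos θ = (a · b) / (‖a‖ ‖b‖). A minimal sketch in plain Python; the three-dimensional vectors are toy values we made up, whereas real SBERT embeddings have hundreds of dimensions (384 for the open-source `all-MiniLM-L6-v2` model, for example).

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """cos(theta) = (a . b) / (|a| * |b|): 1.0 same direction, 0.0 orthogonal, -1.0 opposite."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)

# Toy "embeddings": two paragraphs on the same topic and one off-topic paragraph.
ev_battery_recycling = [0.9, 0.4, 0.1]
lithium_recovery = [0.8, 0.5, 0.2]
cookie_recipes = [0.1, -0.2, 0.9]

print(round(cosine_similarity(ev_battery_recycling, lithium_recovery), 2))  # close in meaning
print(round(cosine_similarity(ev_battery_recycling, cookie_recipes), 2))    # far apart
```

Because the score depends only on the angle, a long paragraph and a short one can still score 1.0 if they point in the same direction; length does not inflate the match.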

What this changes for SEO:

• You win by covering the topic well (definitions, steps, examples, entities), not by repeating the same words.

• Links work best when the linking paragraph is truly about the same thing as your target page.

• Internal links should point to the nearest topical hub and the closest sibling pages.

What Query Match actually does

• Scrapes the target page and the source page (a guest post, press release, or any other page that links to the target).

• Splits them into paragraphs and creates sentence/paragraph embeddings with Sentence-BERT (SBERT).

• Calculates cosine similarity between the two pages (page↔page) and between their paragraphs (paragraph↔paragraph).

• Flags weak paragraphs and uses AI rewriting to raise their scores above a threshold you choose (e.g., 0.20 → 0.50+).

• Checks readability and reading level, so edits stay clear and on-brand.
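The scoring part of that workflow (leaving out scraping, AI rewriting, and readability checks) can be sketched as a small pipeline. A minimal sketch under loud assumptions: paragraphs are split on blank lines, and `embed` is a bag-of-words stand-in for SBERT, so it only detects shared words, not shared meaning. `split_paragraphs`, `embed`, `analyze`, and the example texts are our own hypothetical names, not Query Match's internals; the 0.20 default comes from the example threshold above.

```python
import math
import re
from collections import Counter

def split_paragraphs(text: str) -> list[str]:
    """Split on blank lines, dropping empty chunks."""
    return [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]

def embed(text: str) -> Counter:
    """STAND-IN for SBERT: a bag-of-words vector (word -> count).
    It sees shared words only, not meaning; replace with real sentence embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def analyze(source: str, target: str, threshold: float = 0.20) -> dict:
    """Page<->page score plus, for each source paragraph, its best paragraph<->paragraph match."""
    target_paras = [embed(p) for p in split_paragraphs(target)]
    report = {"page_score": cosine(embed(source), embed(target)), "paragraphs": []}
    for para in split_paragraphs(source):
        vec = embed(para)
        best = max((cosine(vec, t) for t in target_paras), default=0.0)
        report["paragraphs"].append({"text": para, "score": best, "weak": best < threshold})
    return report

target_page = (
    "EV battery recycling recovers lithium and cobalt.\n\n"
    "Recycling plants shred battery packs before chemical recovery."
)
source_page = (
    "Our guest post covers EV battery recycling and lithium recovery.\n\n"
    "We also sell garden furniture."
)
report = analyze(source_page, target_page)
print(f"page score: {report['page_score']:.2f}")
for p in report["paragraphs"]:
    print(f"{p['score']:.2f} {'WEAK' if p['weak'] else 'ok':4} {p['text'][:50]}")
```

To experiment with real sentence embeddings, the open-source sentence-transformers library provides `SentenceTransformer(model_name).encode(texts)` and `util.cos_sim(a, b)`; which model and settings Query Match uses is not stated here. Bag-of-words scores sit on a different scale, so the 0.20/0.50 thresholds should not be expected to transfer to this stand-in.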

How to read the scores (simple rule of thumb)

| Score | Action |
|---|---|
| Below 0.20 | Rewrite the content (off-topic) |
| 0.20–0.50 | Relevant: improve when you have time |
| Above 0.50 | Strong topical fit: keep as is |

(These thresholds help prioritize work and align link context with your target page.)
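The rule of thumb translates directly into a triage function. A minimal sketch; the table does not say which band the exact boundary values 0.20 and 0.50 belong to, so placing both in the middle band is our assumption, and `action_for`, `triage`, and the section names are hypothetical.

```python
def action_for(score: float) -> str:
    """Map a similarity score to the rule-of-thumb action from the score table."""
    if score < 0.20:
        return "rewrite (off-topic)"
    if score <= 0.50:  # assumption: 0.20 and 0.50 themselves count as "relevant"
        return "relevant: improve when you have time"
    return "strong topical fit: keep as is"

def triage(scores: dict[str, float]) -> list[tuple[str, float, str]]:
    """Sort paragraphs weakest-first so the most urgent rewrites come first."""
    return [(name, s, action_for(s)) for name, s in sorted(scores.items(), key=lambda kv: kv[1])]

for name, score, action in triage({"intro": 0.62, "pricing": 0.14, "faq": 0.35}):
    print(f"{name:8} {score:.2f}  {action}")
```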

Case study: Why “topical fit” can beat raw authority

In competitor checks, we often see a strong correlation between ranking position and similarity score for the target topic. A smaller site with very high topical fit can outrank a big brand that is less on-topic for that query.

| URL | Ranking position | Similarity to topic |
|---|---|---|
| TrustRank.org | 1 | 97% |
| Wikipedia.com | 2 | 82% |
| IncRev.co | 3 | 99% (!!) |
| Ahrefs.com | 4 | 72% |
| Positional.com | 5 | 94% |

Why this is interesting: Ahrefs has a very high Domain Rating (DR 91) while IncRev is mid-tier (DR 47), yet IncRev outranks Ahrefs here, most likely because the page is hyper-relevant to the topic. Topical fit can compensate for lower authority.

Ahrefs DR definition: https://help.ahrefs.com/en/articles/1409408-what-is-domain-rating-dr
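To check the relationship between ranking position and similarity on your own competitor data, one option is a rank correlation. A minimal sketch using Spearman's rank correlation (our choice of statistic, not necessarily the one used in the paper), applied to the five rows of the table above. A negative coefficient means better (lower-numbered) positions go with higher similarity; with only five rows the result is illustrative, and a larger sample of queries gives a more reliable picture.

```python
from statistics import mean

def ranks(values: list[float]) -> list[float]:
    """Rank values from 1 (smallest) upward, averaging ranks for ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result

def spearman(x: list[float], y: list[float]) -> float:
    """Spearman's rho = Pearson correlation of the two rank vectors."""
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)

positions = [1, 2, 3, 4, 5]
# TrustRank.org, Wikipedia.com, IncRev.co, Ahrefs.com, Positional.com
similarity = [97, 82, 99, 72, 94]
print(f"Spearman rho: {spearman(positions, similarity):.2f}")
```

A rank correlation fits here because ranking position is ordinal; it only asks whether higher similarity tends to come with better positions, not whether the relationship is linear.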

What you’ll see inside Query Match (screenshots)

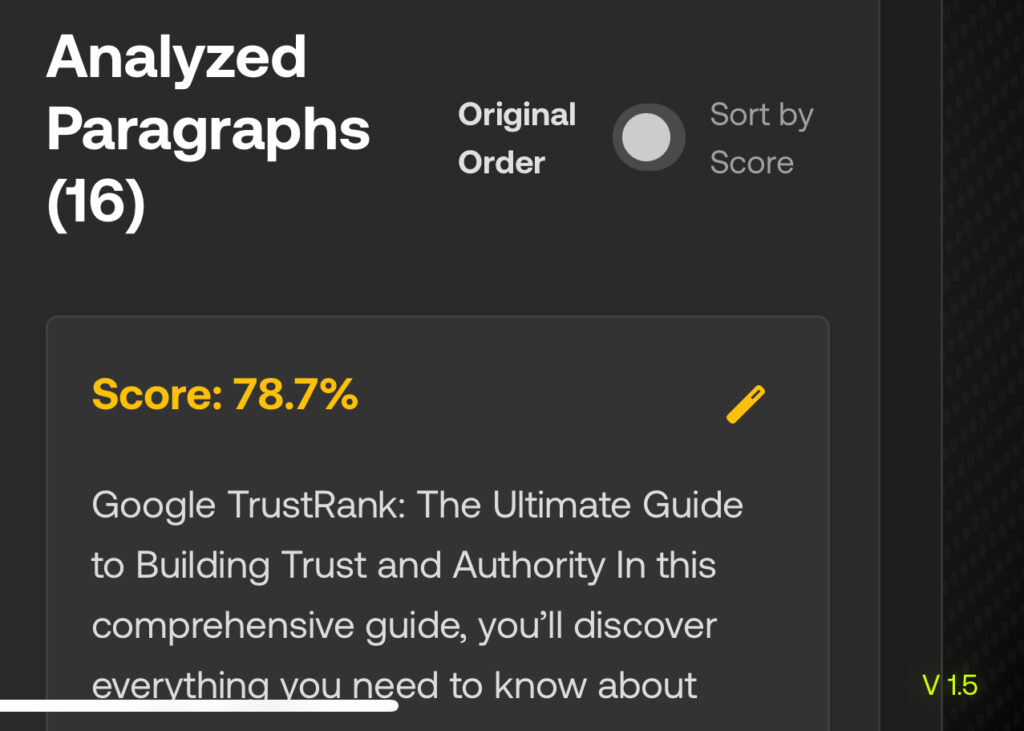

Figure 1. Query Match dashboard: page-level similarity and paragraph breakdown.

Figure 2. Paragraph-level scores with suggestions to raise low sections.

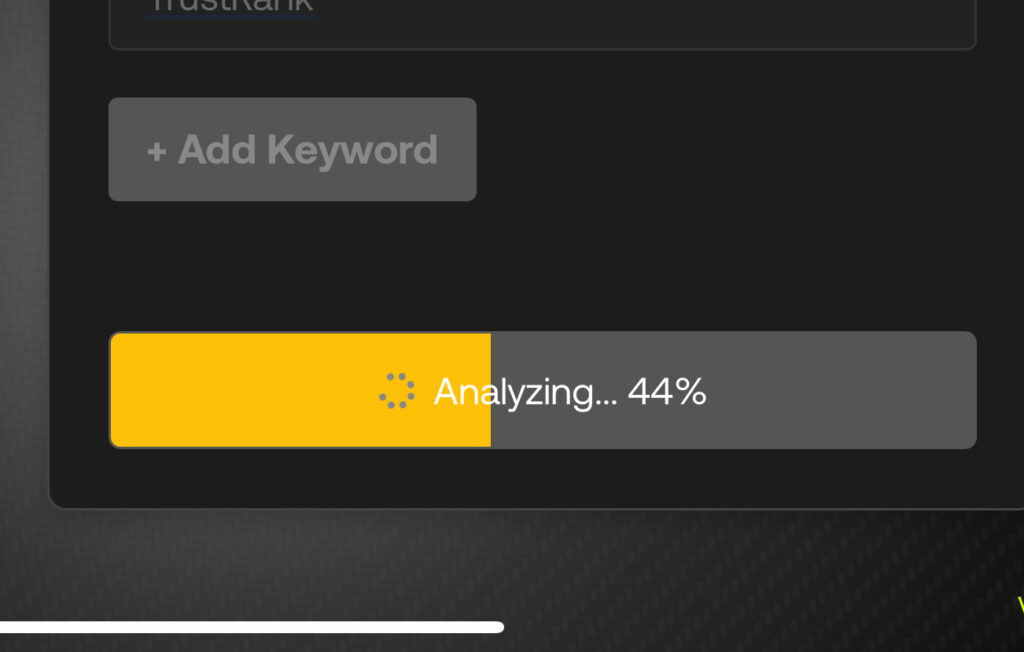

Figure 3. Query Match scrapes target pages in real time, transforms the content into vectors, and calculates the similarity scores.

Figure 4. Query Match is AI-powered and rewrites your paragraphs for you in real time. It generates several variations in parallel and scores each one against your target. When it finds the best match, it returns the new paragraph together with its similarity score, ready for you to publish on your website.
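The selection step described for Figure 4 can be sketched as best-of-N: score every candidate rewrite against the target and keep the highest-scoring one. A minimal sketch, assuming the candidate rewrites already exist (generating them with an AI model is out of scope) and using a bag-of-words stand-in for SBERT embeddings; `pick_best_variant` and the sample texts are hypothetical, not Query Match's internals.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """STAND-IN for a sentence embedding: bag-of-words counts (use SBERT in practice)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def pick_best_variant(target: str, variants: list[str]) -> tuple[str, float]:
    """Score every candidate rewrite against the target page and return the best one."""
    target_vec = embed(target)
    scored = [(v, cosine(embed(v), target_vec)) for v in variants]
    return max(scored, key=lambda pair: pair[1])

target = "Guide to EV battery recycling: how lithium and cobalt are recovered from used packs."
variants = [
    "Our company has many years of experience and great customer service.",
    "We explain how used EV battery packs are recycled to recover lithium and cobalt.",
    "Electric cars are becoming popular in many countries.",
]
best, score = pick_best_variant(target, variants)
print(f"{score:.2f}  {best}")
```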

Popular questions

Is this just keyword density with a new name?

No. Two paragraphs can use different words and still be near each other in meaning—vectorization captures that.

Do I need code to try this?

No. Query Match does it for you. If you’re comfortable with programming and applied math, you can also experiment with open-source models like SBERT. If not, contact us at IncRev and let us help you with similarity scoring.

Where this fits in AI Search (SGE, Copilot, Perplexity)

AI assistants often retrieve and quote the most semantically relevant snippets. If your paragraphs are close to the query in semantic space, you’re more likely to be pulled into answers. Structure content into citable blocks (claim → evidence → takeaway) and include the right entities (terms, standards, tools) so your paragraphs are easy to match.
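A simplified picture of that retrieval step: embed the query, score every paragraph, and return the top k. A minimal sketch only; how each assistant actually retrieves content is not public in detail, and the bag-of-words `embed` below is a stand-in for a sentence-embedding model. `top_k_snippets` and the sample paragraphs are hypothetical.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """STAND-IN for a sentence embedding (bag-of-words counts)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_k_snippets(query: str, paragraphs: list[str], k: int = 2) -> list[tuple[float, str]]:
    """Return the k paragraphs closest to the query, best first, with their scores."""
    q = embed(query)
    scored = [(cosine(q, embed(p)), p) for p in paragraphs]
    return sorted(scored, reverse=True)[:k]

query = "how do safety light curtains stop a machine"
paragraphs = [
    "Safety light curtains stop a machine as soon as a hand breaks the light beam.",
    "Our team has attended trade fairs across Europe since 2010.",
    "A light curtain must be mounted at a safe distance so the machine can stop in time.",
]
for score, text in top_k_snippets(query, paragraphs, k=2):
    print(f"{score:.2f}  {text}")
```

Self-contained paragraphs matter here because each one is scored on its own: a block that states its claim and names its entities can be matched without the surrounding page.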

Want the math?

If you’re curious about the technical side (why cosine similarity works, what SBERT is optimizing, and how this relates to classic distributional semantics), read the full paper:

Mathematical Foundations of Text Vectorization and the Sentence-BERT Algorithm (INCREV SEO Research)
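For orientation, these are the core formulas from the original Sentence-BERT paper (Reimers and Gurevych, 2019): mean pooling turns the token vectors of a sentence into one sentence vector, cosine similarity compares two sentence vectors, and training uses either a regression objective on the cosine score or a classification objective on the two embeddings and their element-wise difference. The INCREV paper's own notation and emphasis may differ.

```latex
\begin{aligned}
\mathbf{u} &= \frac{1}{T}\sum_{t=1}^{T} \mathbf{h}_t
  && \text{mean pooling over the } T \text{ token vectors } \mathbf{h}_t \\
\cos(\mathbf{u},\mathbf{v}) &= \frac{\mathbf{u}\cdot\mathbf{v}}{\lVert\mathbf{u}\rVert\,\lVert\mathbf{v}\rVert} \in [-1, 1]
  && \text{similarity of two sentence embeddings} \\
\mathcal{L}_{\text{reg}} &= \big(\cos(\mathbf{u},\mathbf{v}) - y\big)^2
  && \text{regression objective (MSE), } y = \text{gold similarity label} \\
\mathbf{o} &= \operatorname{softmax}\!\big(W_t\,[\mathbf{u};\,\mathbf{v};\,\lvert\mathbf{u}-\mathbf{v}\rvert]\big)
  && \text{classification objective, trained with cross-entropy}
\end{aligned}
```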

Further reading

INCREV SEO Research Community

https://zenodo.org/communities/increvseo/

INCREV Academy: Google TrustRank

https://increv.co/academy/google-trustrank/

INCREV Academy: Semantic vectorization for SEO

https://increv.co/academy/seo-research/semantic-vectorization-seo